Web Performance Part 2. Speedy Content Delivery with HTTP/2

Post Author:

CacheFly Team

Date Posted:

August 13, 2020

Let’s cut to the chase: HTTP/2 is great. It’s definitely not a magic bullet that’s going to put CDNs out of business any time soon, and it doesn’t solve all the problems in the web performance space. However, it improves on its predecessor immensely, and its benefits far outweigh its downsides.

Obviously the main benefit that we’re interested in is that it’s faster.

If you haven’t already, I recommend a quick visit to the HTTP vs HTTPS site for a good live demonstration of HTTP/2 performance. Just ensure you’re using an up-to-date browser first.

Measuring the impact of HTTP/2

Depending on which benchmark you read, you could be left thinking that HTTP/2 will improve performance by hundreds of percent, or that it might make your site perform worse.

The truth is that it depends very heavily on your use case: how your site is structured and how people use it.

On a traditional website, a page is made up of multiple objects, so loading it requires multiple requests to the server.

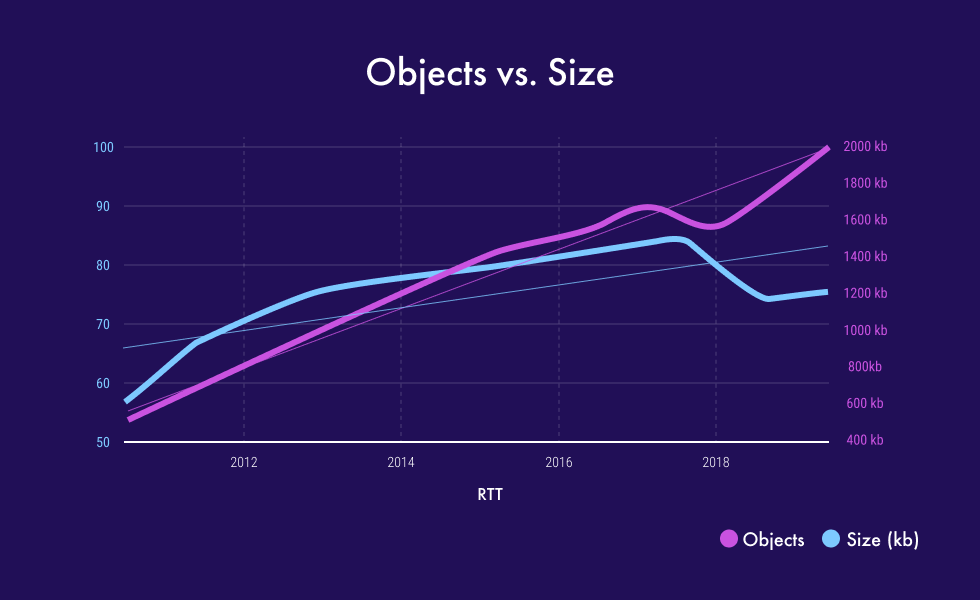

It’s been known for quite a long time that the average number of objects per page is steadily increasing, which in itself is increasing the average size of a web page. At the same time, the cost of loading all these objects has been increasing the average page load time.

When HTTP/2 was designed with the goal of reducing latency, this pattern of many objects fetched through many parallel requests was the key aspect of HTTP/1.1 that needed to be addressed.

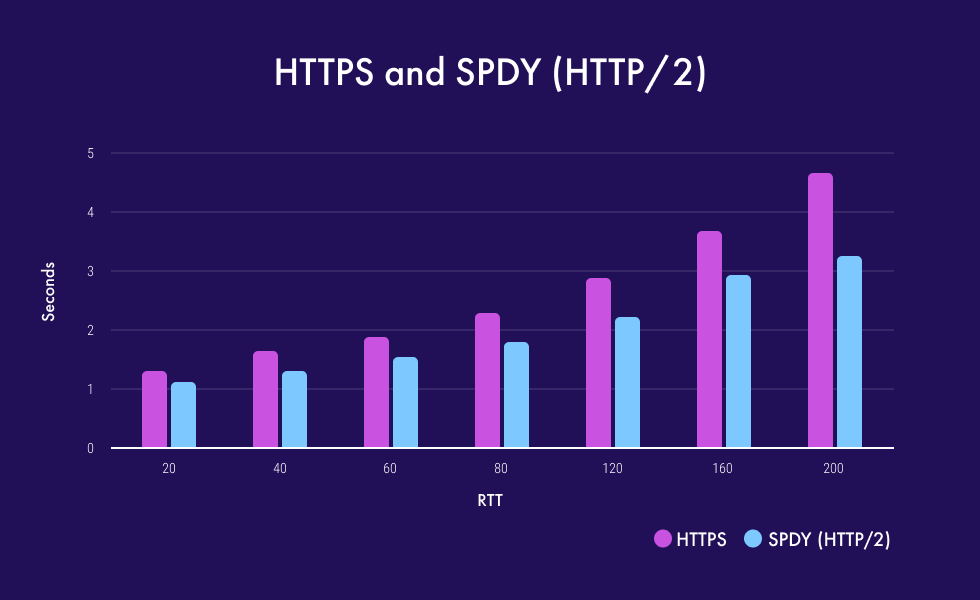

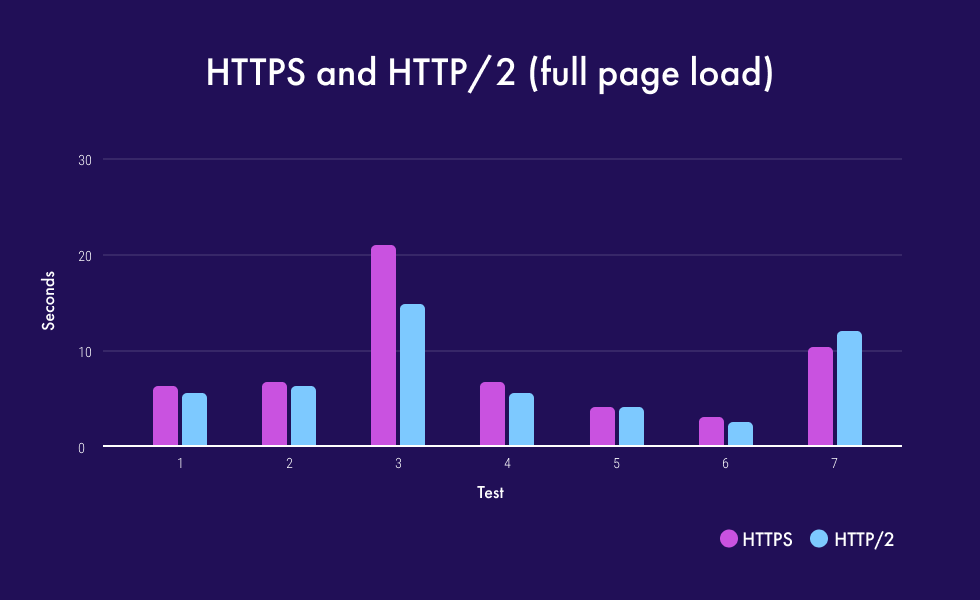

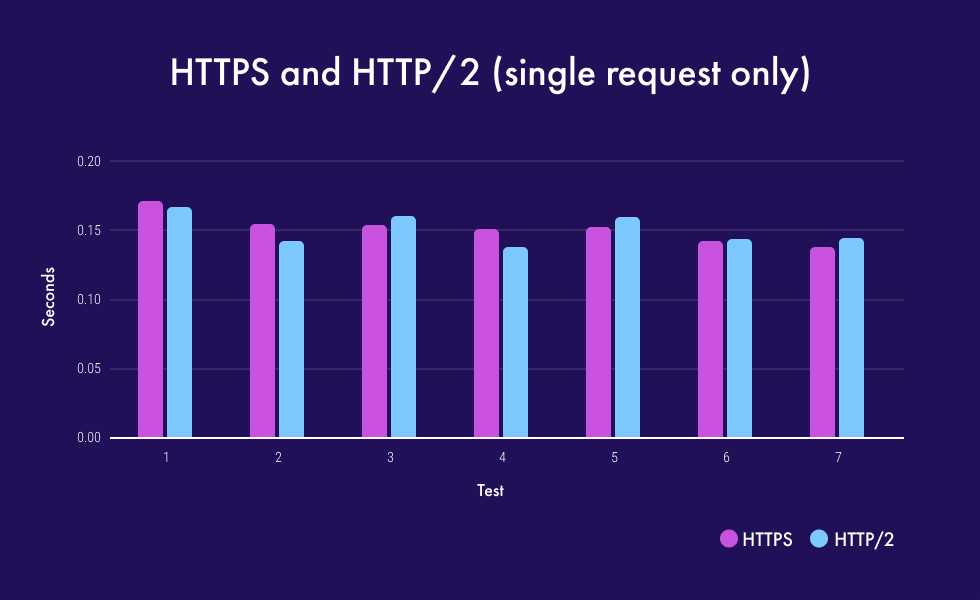

Hence, benchmarks that measure a full page load on a page that has lots of objects show a big performance boost, while benchmarks that only measure the initial connection and HTML fetch don’t show any boost at all.

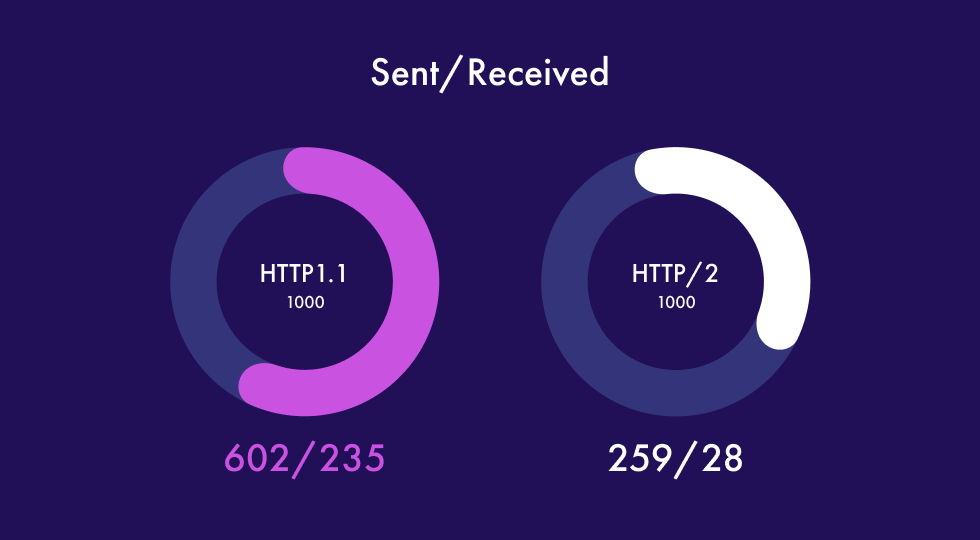

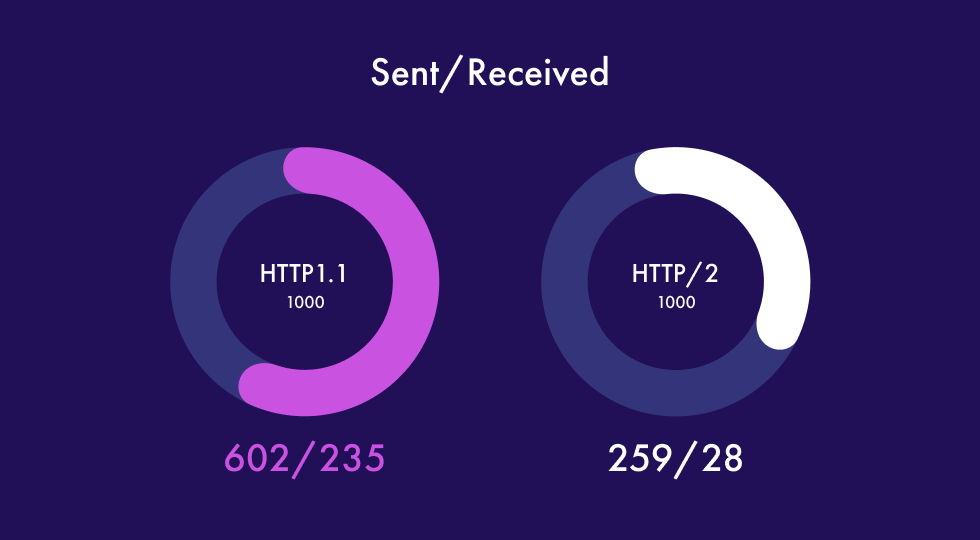

It’s worth pointing out that the scales are the same in these two graphs. A single request can take as little as 0.15 seconds over either HTTPS (HTTP/1.1) or HTTP/2. However, a full page load (which loads all the resources needed for the page) can take several seconds. In some of the test cases, the difference was negligible (and almost removed by averaging). However, in all but one case, HTTP/2 was quicker.
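To see why the two kinds of benchmark disagree, here is a rough back-of-the-envelope model. It is only a sketch under loud assumptions: every object costs one round trip, bandwidth and connection setup are ignored, an HTTP/1.1 browser opens 6 connections, and the page has 60 objects. Only the 0.15 second figure comes from the measurements above; the function name and the other numbers are made up for illustration.

```python
import math

def estimated_fetch_time(num_objects, rtt, connections=6, multiplexed=False):
    """Rough time to fetch small objects, ignoring bandwidth and setup cost."""
    if num_objects <= 0:
        return 0.0
    if multiplexed:
        # HTTP/2 style: every request is in flight at once on one connection.
        return rtt
    # HTTP/1.1 style: each connection carries one request at a time,
    # so the requests go out in "waves" of `connections` at a time.
    waves = math.ceil(num_objects / connections)
    return waves * rtt

# A single request looks identical under both models.
single_h1 = estimated_fetch_time(1, rtt=0.15)
single_h2 = estimated_fetch_time(1, rtt=0.15, multiplexed=True)

# A hypothetical 60-object page is where the difference appears.
page_h1 = estimated_fetch_time(60, rtt=0.15)
page_h2 = estimated_fetch_time(60, rtt=0.15, multiplexed=True)

print(single_h1, single_h2, page_h1, page_h2)
```

In this toy model the single fetch costs 0.15 seconds either way, while the 60-object page costs about 1.5 seconds in ten waves versus 0.15 seconds multiplexed. Real pages sit somewhere in between, because bandwidth, server think time, and dependencies between objects all matter.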

Problems addressed by HTTP/2

As noted above, HTTP/2 is all about improving latency by optimizing the multiple-request use case.

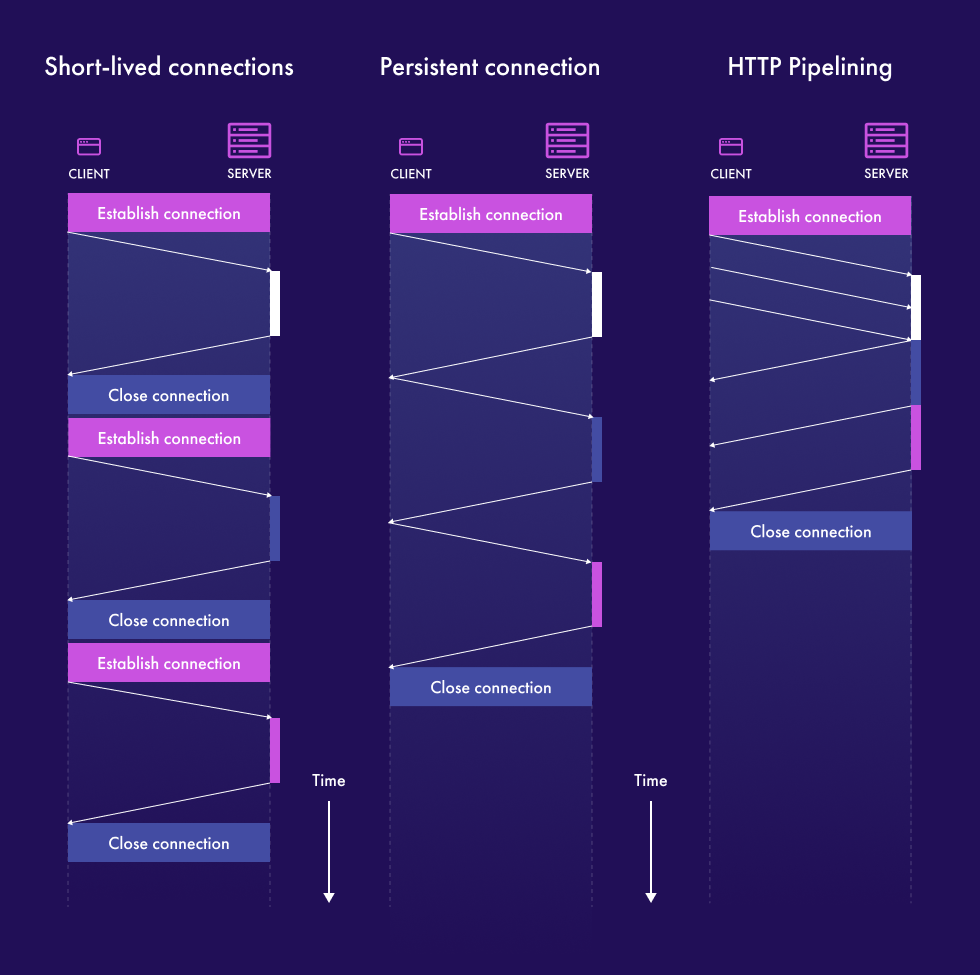

HTTP/1.0 is particularly bad at doing this because the protocol design was extremely simplistic and stated quite clearly that each request has to complete before another request can be made over the same connection. Everything has to be done in sequence and not in parallel. This is a problem called head-of-line blocking.

HTTP/1.0 kept this behavior to stay backward compatible with the original version of HTTP released in 1991, which is still largely supported today. Yet it didn’t take long for this behavior to be recognized as a problem and a major cause of latency.

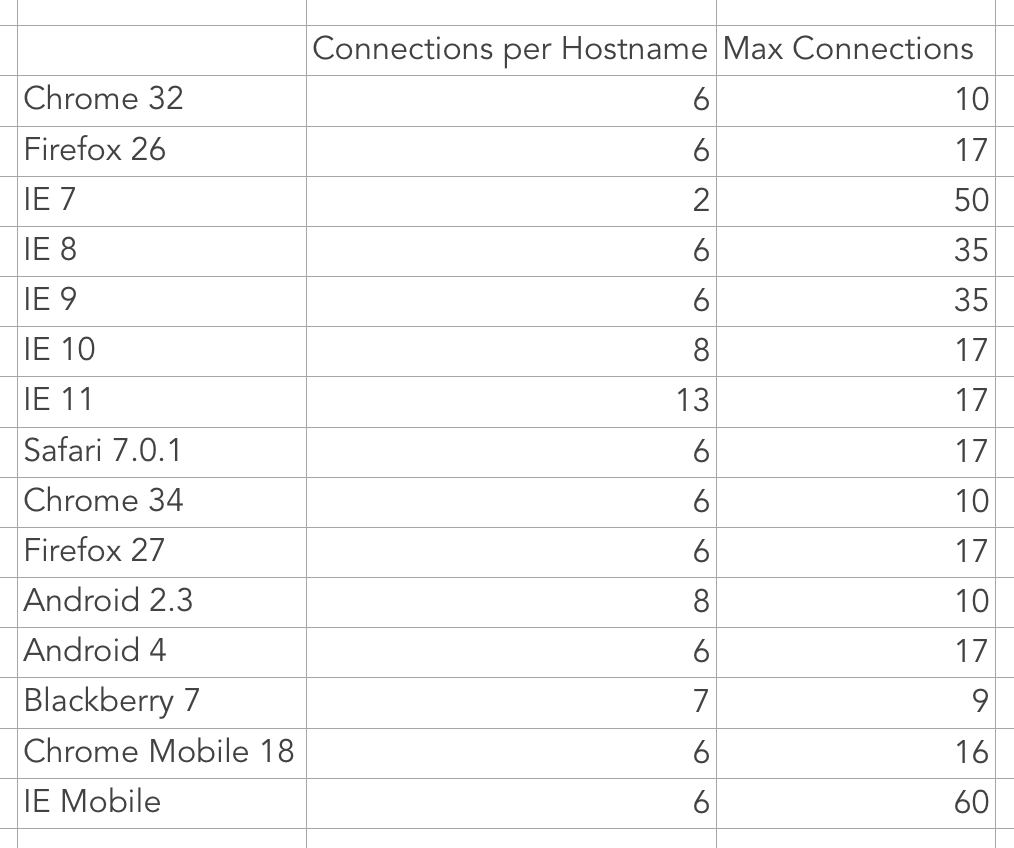

In an attempt to make things more parallel, browsers introduced the idea of making multiple connections to a web server, although not too many, so as not to overload the server. Because each browser defines its own number of connections, there’s a lot of variability.
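The effect of opening more connections can be sketched with a small simulation. This is a simplified model, not how any particular browser schedules requests: each connection serves one request at a time, requests are handed out in order to whichever connection frees up first, and the per-object durations are hypothetical.

```python
import heapq

def completion_times(durations, connections):
    """Finish time of each request when at most `connections` run at once."""
    # Min-heap of the times at which each connection next becomes free.
    free_at = [0.0] * connections
    heapq.heapify(free_at)
    finished = []
    for duration in durations:
        start = heapq.heappop(free_at)  # earliest available connection
        end = start + duration
        finished.append(end)
        heapq.heappush(free_at, end)
    return finished

# Hypothetical seconds needed per object: one slow object, five quick ones.
durations = [0.4, 0.1, 0.1, 0.1, 0.1, 0.1]

one_conn = completion_times(durations, connections=1)
three_conn = completion_times(durations, connections=3)

print(max(one_conn), max(three_conn))
```

With one connection everything waits behind the slow first object and the page finishes after roughly 0.9 seconds. With three connections the quick objects flow around it and the page finishes after roughly 0.4 seconds, at the cost of three TCP connections for the server to maintain.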

Attempts to fix this were made with HTTP/1.1 in 1999, but ultimately they resulted in features that didn’t quite cut it. The idea of persistent connections, where the TCP connection can be reused multiple times, has worked out well and is in widespread use, especially over HTTPS where new connections are time-consuming to create.

Pipelining, where several requests are sent over one connection without waiting for each response, has not fared as well. Although it’s quite surprising that servers and clients don’t take advantage of pipelining, there are two major problems holding it back.

The first problem with pipelining is buggy and incompatible implementations of systems that either serve or intercept it. The HTTP interceptors, which are often virus scanners, are the most common cause of incompatibility issues. These pieces of software advertise and attempt to talk HTTP/1.1 but, when faced with a pipelined request, simply cause connections to fail. As a result, browser implementers have found that enabling pipelining causes a significant rise in errors, through no fault of their own.

The second problem is that when browsers queue up one request behind another one, they don’t know how long the first request will take. If the browser happens to request the slow and unimportant objects before the small vital objects, there’s still a long delay before the page is fully loaded. This has led to complex algorithms that can choose to reschedule a request onto a different TCP connection, which reduces latency but increases wasted bandwidth.
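The ordering problem is easy to put into numbers. The sketch below assumes a single pipelined connection where responses must come back in the order the requests were sent, and it counts only the time the server spends sending each response. The file names and timings are hypothetical.

```python
from itertools import accumulate

def pipelined_finish_times(requests):
    """Map each object name to its finish time on one pipelined connection.

    `requests` is a list of (name, service_time) pairs in the order sent.
    Responses are returned strictly in that order, so each one finishes
    only after everything queued ahead of it has finished.
    """
    names = [name for name, _ in requests]
    finish = accumulate(service_time for _, service_time in requests)
    return dict(zip(names, finish))

# The same two objects, requested in two different orders.
slow_first = pipelined_finish_times(
    [("big-image.jpg", 2.0), ("critical.css", 0.05)]
)
vital_first = pipelined_finish_times(
    [("critical.css", 0.05), ("big-image.jpg", 2.0)]
)

print(slow_first["critical.css"], vital_first["critical.css"])
```

The whole page takes the same time either way, but the small stylesheet arrives after about 2.05 seconds when it is stuck behind the image and after 0.05 seconds when it goes first. The browser cannot know the service times in advance, which is exactly why guessing the right order is hard.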

Solutions provided by HTTP/2

HTTP/2 fixes all of these issues, and some others I don’t really have the space to discuss here, by completely reworking the HTTP specification.

- HTTP/2 ditches the idea that the on-the-wire data in the TCP stream should be written in human-readable ASCII and instead introduces binary framing, similar to that used in Ethernet, IP, and TCP itself. This is good because small, predictably sized frames in a binary-encoded format are more efficient to process.

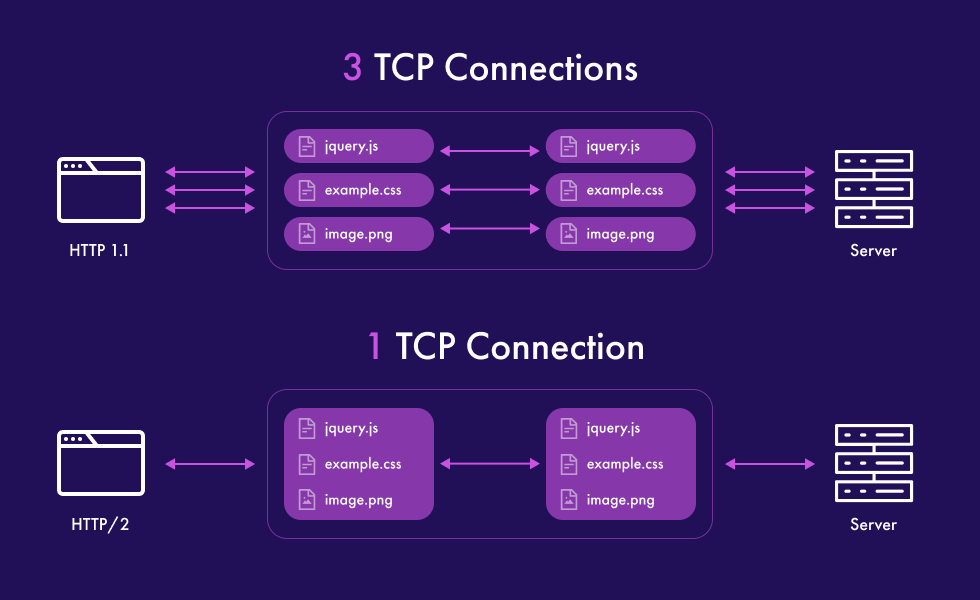

- HTTP/2 eliminates the HTTP head-of-line blocking problem by introducing the ability to multiplex independent streams of data over a single TCP connection. By doing this, the TCP (and TLS) overhead is amortized over all the requests for a given hostname. On the Firefox blog, Patrick McManus reports that connection reuse goes from 25% to 75% with HTTP/2 enabled.

- HTTP/2 introduces header compression, which according to Google can contribute an 80% reduction in the use of network bandwidth for protocol overhead, which on slow connections produces large latency reductions.

- HTTP/2 introduces additional metadata and control signals which allow the browser and the server to understand things such as prioritization, dependencies, and flow control.
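To make binary framing and multiplexing a little more concrete, here is a minimal sketch of the 9-byte frame header described in RFC 7540: a 24-bit payload length, an 8-bit type, 8-bit flags, and a reserved bit followed by a 31-bit stream identifier. The helper names are my own, and this is nowhere near a working HTTP/2 implementation: it leaves out the connection preface, SETTINGS, header compression, flow control, and everything else.

```python
import struct

DATA = 0x0        # DATA frame type
END_STREAM = 0x1  # flag marking the last frame of a stream

def encode_frame(frame_type, flags, stream_id, payload):
    """Prefix a payload with a 9-byte HTTP/2 style frame header."""
    length = len(payload)
    if length >= 2**24:
        raise ValueError("payload too large for a 24-bit length field")
    # The 24-bit length is packed as one byte plus two bytes, big-endian.
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,
        frame_type, flags,
        stream_id & 0x7FFFFFFF,  # top bit is reserved
    )
    return header + payload

def decode_frames(buffer):
    """Split a byte string into (stream_id, type, flags, payload) tuples."""
    frames, offset = [], 0
    while offset + 9 <= len(buffer):
        hi, lo, frame_type, flags, stream_id = struct.unpack_from(
            ">BHBBI", buffer, offset
        )
        length = (hi << 16) | lo
        payload = buffer[offset + 9 : offset + 9 + length]
        frames.append((stream_id & 0x7FFFFFFF, frame_type, flags, payload))
        offset += 9 + length
    return frames

# Two responses interleaved on one connection: stream 3 does not have
# to wait for stream 1 to finish.
wire = (
    encode_frame(DATA, 0, 1, b"<html>")
    + encode_frame(DATA, END_STREAM, 3, b"body{}")
    + encode_frame(DATA, END_STREAM, 1, b"</html>")
)

bodies = {}
for stream_id, _type, _flags, payload in decode_frames(wire):
    bodies[stream_id] = bodies.get(stream_id, b"") + payload

print(bodies)
```

Because every frame carries its stream identifier, the receiver can reassemble each response independently no matter how the frames are interleaved. The fixed-size header is also what makes parsing cheap: the reader always knows exactly how many bytes to consume next.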

Although HTTP/2 does a great job, it’s not without its faults. The main feature of multiplexing requests over TCP provides a huge benefit compared to the problems experienced previously. Yet TCP is in itself notoriously difficult to optimize and, ironically, also suffers from a head-of-line blocking problem (similar to the one which HTTP/2 set out to fix). By itself, this functionality may not have been enough, but when combined with the other features of HTTP/2 we have a protocol that I won’t hesitate to recommend, bar a few specific circumstances. CacheFly has been providing HTTP/2 for all customers since 2018 and is proud to ensure that we’re always providing the best possible technology.
