Web Performance Part 1: Bandwidth, Throughput, and Latency

Post Author: CacheFly Team

Date Posted: July 23, 2020

It should be no surprise that improving website performance and efficiency is at the heart of every content delivery network. We’re always looking for ways to improve delivery speed.

That means not just optimizing for the lowest time to first byte, but also keeping the time to last byte as low as possible. This is increasingly important in eCommerce use cases, where research has repeatedly found that slow load times reduce sales conversion rates. According to Business News Daily, 90% of shoppers polled said they had left an eCommerce site that failed to load fast enough. 57% of respondents said that they purchased products from a similar retailer after leaving a slow website, while 40% said they went to Amazon instead. Almost one-fourth of online shoppers said they never returned to slow eCommerce sites. A Forrester research study offers an analysis of consumer reactions to poor online shopping experiences. In essence, page load time heavily affects the bounce rate.

These metrics are also crucial for avoiding penalties imposed by major search engines and browsers. A search engine that considers a site slow can reduce its visitor traffic. A browser that expects a site to load slowly can show an unexpected and ugly loading screen, reducing the overall quality of experience for the site’s users. Google’s “Speed Update” rolled out for all users in July 2018, and Google has announced that it may add a “badge of shame” for slow-loading sites in Chrome.

These metrics aren’t the whole story for use cases such as video delivery and real-time user-generated content. In both cases, the ongoing ability to deliver large volumes of material consistently and reliably over longer-lived sessions can be just as important as the time it takes to load the initial content.

A busy network with many users sharing its resources won’t always have enough bandwidth to deliver content as quickly as it has in the past. If one of the users on the network demands more throughput than usual, the network can become so overwhelmed that it can no longer serve all of its users. Ionos explains this “noisy neighbor” effect and some possible solutions on its blog.

This is the essence of the difference between latency, bandwidth, and throughput.

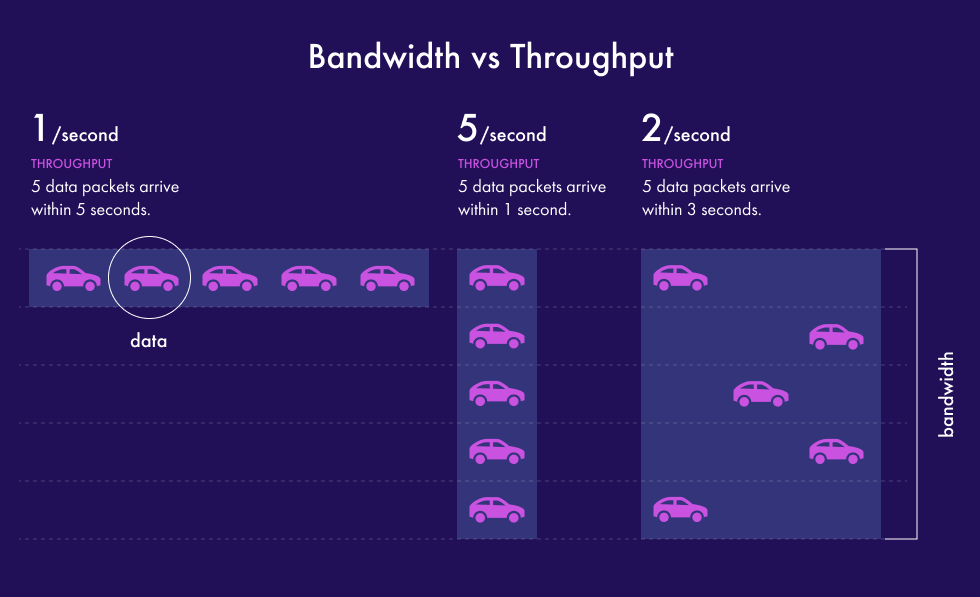

Latency is how long something takes to happen; in our discussion, it is the time it takes for data to travel between the user and the server, plus the time it takes the server to process it.

Bandwidth is the maximum capacity of the network, which depends not just on the size of the transfer network but also on the processing capacity of the servers.

Throughput is the measurement of how much data is actually exchanged between the user and the server over a given period.
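To make these definitions concrete, here is a deliberately simplified model in Python (an assumption for illustration; it ignores TCP slow start, packet loss, and server processing time): a transfer costs one latency delay plus the time needed to push the bytes through the available bandwidth. Throughput is the data moved divided by that total time, so it stays below bandwidth whenever latency is non-zero.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float, latency_s: float) -> float:
    """Simplified model: one latency delay plus serialization time at full bandwidth."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


def throughput_bps(size_bytes: float, bandwidth_bps: float, latency_s: float) -> float:
    """Throughput = bits actually delivered / total elapsed time."""
    return (size_bytes * 8) / transfer_time_s(size_bytes, bandwidth_bps, latency_s)


if __name__ == "__main__":
    # A 1 MB file over a 100 Mbps link with 50 ms of latency (illustrative numbers)
    t = transfer_time_s(1_000_000, 100e6, 0.050)
    tp = throughput_bps(1_000_000, 100e6, 0.050)
    print(f"{t:.3f} s, {tp / 1e6:.1f} Mbps")
```

With these illustrative numbers the model prints `0.130 s, 61.5 Mbps`: the link is rated at 100 Mbps, but latency alone costs nearly 40% of the achievable throughput for a file of this size.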

Although throughput is what you really want to optimize, you can only control bandwidth and latency directly.

Additionally, optimizing either bandwidth or latency alone won’t work.

For example, when you’re already using all of your internet connection’s bandwidth, upgrading your server won’t make the website faster.
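This is a bottleneck effect: every request passes through several stages in series, and the slowest stage caps the whole chain. A minimal Python sketch, where the component names and capacities (in Mbps) are hypothetical:

```python
from __future__ import annotations


def bottleneck(capacities_mbps: dict[str, float]) -> tuple[str, float]:
    """Return the stage with the lowest capacity; it caps end-to-end throughput."""
    name = min(capacities_mbps, key=lambda stage: capacities_mbps[stage])
    return name, capacities_mbps[name]


# Hypothetical capacities for each stage a request passes through, in Mbps
site = {"internet_uplink": 1_000, "load_balancer": 5_000, "web_servers": 3_000}
print(bottleneck(site))  # ('internet_uplink', 1000)

# Doubling server capacity leaves the bottleneck, and the site's speed, unchanged
upgraded = {**site, "web_servers": 6_000}
print(bottleneck(upgraded))  # ('internet_uplink', 1000)
```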

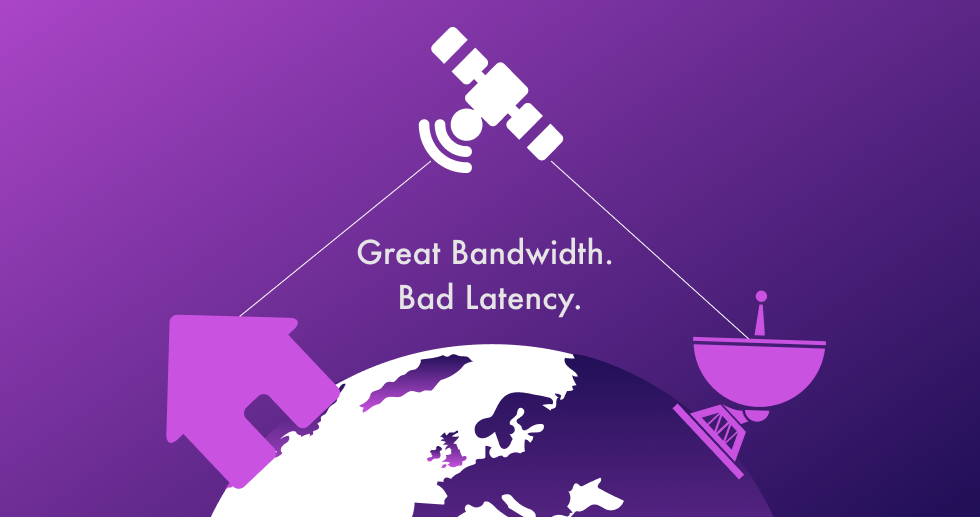

Similarly, upgrading the internet connection by switching from a cable in the ground to a traditional satellite link may significantly increase the available bandwidth, but it is also likely to significantly worsen latency (because satellites are far away).
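To put rough numbers on “far away”: a geostationary satellite orbits about 35,786 km above the equator, and signals cannot travel faster than light. The Python sketch below estimates the minimum propagation round-trip time for a geostationary link versus a hypothetical 1,000 km fiber path, assuming light in fiber travels at roughly two-thirds of its speed in a vacuum and ignoring processing and queuing delays.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in a vacuum
FIBER_SPEED_KM_S = SPEED_OF_LIGHT_KM_S * 2 / 3  # rough approximation for light in glass fiber
GEO_ALTITUDE_KM = 35_786  # geostationary orbit altitude above the equator


def fiber_rtt_ms(path_km: float) -> float:
    """Propagation-only round trip over a fiber path of the given length."""
    return 2 * path_km / FIBER_SPEED_KM_S * 1000


def geo_satellite_rtt_ms() -> float:
    """Best-case round trip: user -> satellite -> ground station, then back again.

    Assumes both ends sit directly beneath the satellite; real slant paths are longer.
    """
    return 4 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S * 1000


print(f"fiber, 1,000 km: {fiber_rtt_ms(1_000):.1f} ms")  # about 10.0 ms
print(f"geostationary satellite: {geo_satellite_rtt_ms():.0f} ms")  # about 477 ms
```

Even before any server does any work, the satellite path adds close to half a second to every round trip, and a typical page load needs many round trips.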

Good throughput comes from having both: more than sufficient bandwidth and low latency.

Improving Bandwidth

When it comes to bandwidth, more is better. Available bandwidth is perhaps the easier of the two to improve. It often comes down to buying more servers, buying more capacity from network providers, or strategically setting up peering agreements (if you operate your own autonomous system, which enables you to do that).

That said, I shouldn’t be too quick to dismiss the other options, most of which your delivery network can enable for you:

- Increase cache expiry timeouts, so that content stays in users’ browsers for longer.

- Use compression on all responses, so that you transmit fewer bytes.

- Use automatic real-time image and video optimization technology.

- Pre-optimize resources that can’t be optimized on the fly. Put all your HTML, CSS, and JavaScript through a bundler (such as webpack) and a tree shaker. However, I wouldn’t go as far as concatenating and inlining all your resources: although that works well for HTTP/1.1, it will likely hurt you with clients that use the newer HTTP/2 and HTTP/3 protocols.
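As an illustration of the first two items, here is a minimal Python sketch using the standard-library `gzip` module. The function name, the repetitive sample page, and the one-year `max-age` for versioned static assets are assumptions chosen for illustration; in practice your CDN or web server would normally handle both steps.

```python
from __future__ import annotations

import gzip


def prepare_static_response(body: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Hypothetical helper: compress a static asset and mark it as long-lived in caches."""
    headers = {
        # One year; "immutable" tells browsers not to revalidate versioned assets
        "Cache-Control": "public, max-age=31536000, immutable",
        # Caches must keep separate copies for clients that do and don't accept gzip
        "Vary": "Accept-Encoding",
    }
    # Simplified negotiation: only compress if the client advertises gzip support
    if "gzip" in accept_encoding.lower():
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers


page = ("<li class='product'>Example product</li>\n" * 500).encode("utf-8")
payload, response_headers = prepare_static_response(page, "gzip, deflate, br")
print(len(page), "bytes before,", len(payload), "bytes after")
```

Only pair a long `max-age` with `immutable` on versioned (fingerprinted) URLs; otherwise users may keep seeing stale content after you deploy a change.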

The principle behind all of these is that by sending only the data you need, you ensure that bandwidth isn’t wasted, which leaves more bandwidth for everything else. For example, there is no need to stream ultra-high-definition 4K video to a device whose screen only displays standard definition. We discussed how images can kill your speed in a previous blog post.

As a final note, be sure to optimize content for the bandwidth that your target audience has available to them, as this varies widely in different parts of the world.
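Both points can be combined when choosing which version of a video to send. The Python sketch below picks the highest-bitrate rendition that exceeds neither the device’s screen resolution nor the viewer’s measured bandwidth; the rendition ladder and the 80% safety margin are hypothetical values, not recommendations, and real adaptive-bitrate players use more sophisticated logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int  # vertical resolution in pixels
    bitrate_kbps: int  # average encoded bitrate


# Hypothetical rendition ladder, highest quality first
LADDER = [
    Rendition(2160, 16_000),  # 4K
    Rendition(1080, 5_000),
    Rendition(720, 3_000),
    Rendition(480, 1_200),  # standard definition
    Rendition(360, 700),
]


def pick_rendition(screen_height: int, bandwidth_kbps: float, safety: float = 0.8) -> Rendition:
    """Choose the best rendition that fits both the screen and the available bandwidth."""
    budget = bandwidth_kbps * safety  # leave headroom for bandwidth fluctuations
    fits = [r for r in LADDER if r.height <= screen_height and r.bitrate_kbps <= budget]
    if not fits:
        # Nothing fits: fall back to the smallest rendition rather than sending nothing
        return min(LADDER, key=lambda r: r.bitrate_kbps)
    return max(fits, key=lambda r: r.bitrate_kbps)


print(pick_rendition(480, 50_000))  # SD screen on a fast link: no 4K needed
print(pick_rendition(2160, 5_000))  # 4K screen on a slower link
```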

Thinking About Bandwidth in Terms of Overall Website Capacity

It’s quite common (and accurate) to think of bandwidth as just the available bandwidth within the network. Yet we’re interested in having fast websites, not just fast systems. So it’s useful to think of your website’s overall capacity as its available website bandwidth. This leads us to consider questions such as:

- Should I have more than one server? (yes)

- Are my servers able to handle enough simultaneous requests to max out my network bandwidth?

- Are my load balancers able to process data packets quickly enough for me to be able to max out my network bandwidth?

- Are my servers configured to use all of their hardware to its full potential?

- Where is the most commonly hit bottleneck?

- Should I move my origin servers into a data center that is closer to my CDN?

- Can I avoid the need for an origin by running my workload on auto-scaled edge servers?
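The second question can be answered with back-of-the-envelope arithmetic. To saturate a link, your servers must sustain roughly the link’s capacity divided by the average response size in requests per second, and by Little’s Law (average concurrency = arrival rate × average time in the system) they must keep that rate multiplied by the average request duration in flight at once. A minimal Python sketch; the 10 Gbps link, 100 KB average response, and 200 ms average request duration are hypothetical inputs.

```python
def requests_to_saturate(link_bps: float, avg_response_bytes: float) -> float:
    """Requests per second needed to fill the link with response bodies (ignores overhead)."""
    return link_bps / 8 / avg_response_bytes


def required_concurrency(request_rate_per_s: float, avg_request_s: float) -> float:
    """Little's Law: average requests in flight = arrival rate * average time per request."""
    return request_rate_per_s * avg_request_s


rate = requests_to_saturate(10e9, 100_000)  # hypothetical 10 Gbps link, 100 KB responses
in_flight = required_concurrency(rate, 0.200)  # hypothetical 200 ms per request
print(f"{rate:,.0f} requests/s, about {in_flight:,.0f} concurrent requests")
```

Under these assumptions, if your servers, or the load balancers in front of them, cannot keep around 2,500 requests in flight at once, they rather than the network are the bottleneck.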

Of course, bandwidth and latency are related metrics, so many bandwidth optimizations will also reduce latency. Still, be careful: some bandwidth optimizations, such as on-the-fly compression, can increase latency because of the additional processing required for each request. Whether a specific optimization is worth it often depends on how it’s implemented and the type of workload it is applied to.
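One way to reason about that trade-off is to compare delivery times with and without compression at a given bandwidth. The Python sketch below is a simplification under stated assumptions: the compression time is measured separately and passed in, client-side decompression time is ignored, and the sizes and timings in the usage example are hypothetical.

```python
def compression_saves_time(original_bytes: int, compressed_bytes: int,
                           compress_s: float, bandwidth_bps: float) -> bool:
    """True if compressing on the fly is expected to deliver the response sooner."""
    send_uncompressed_s = original_bytes * 8 / bandwidth_bps
    send_compressed_s = compress_s + compressed_bytes * 8 / bandwidth_bps
    return send_compressed_s < send_uncompressed_s


# Hypothetical 500 KB response that compresses to 100 KB in 5 ms
print(compression_saves_time(500_000, 100_000, 0.005, 10e6))  # slow 10 Mbps client link
print(compression_saves_time(500_000, 100_000, 0.005, 10e9))  # fast 10 Gbps link
```

Under these assumptions compression wins on the 10 Mbps link but loses on the 10 Gbps link. Caching the compressed output, so the CPU cost is paid once rather than on every request, changes the answer again.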

Improving Latency

When it comes to latency, less is better. You always want things to take less time.

Improving latency is more complicated; there are many options, some easier than others. Some of the ways we ensure low latency at CacheFly are:

- Bringing servers and content closer to users. This is why every content delivery network in the world is so proud to show you its map of servers worldwide, just like CacheFly’s network map.

- Maintaining a well-connected network with multiple network paths available everywhere, so that no valuable time is wasted on systems that are down or out of bandwidth. This is why CacheFly added peering.

- Always switching traffic to the least contended network links in real time. The fewer other people using a link, the more of it can be used for your traffic. CacheFly pioneered TCP Anycast in 2002 and is continually improving its BestHop routing technology.

- Optimizing the network protocols on which the web runs. While staying compliant with the standards to ensure interoperability, there are many options that can be tuned and tweaked. A proprietary implementation such as ZetaTCP can automatically tune network protocols for the lowest latency.

- Keeping requests for predictable, static content away from origin servers, so that they have more bandwidth available for generating dynamic content. Fewer requests and more bandwidth mean that requests spend less time waiting in a backlogged queue before being served; check out how Origin Shield Maximizes Your Security.

- Correctly using the latest and fastest versions of the underlying technology which powers the web.
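The queueing point about origin servers can be illustrated with the textbook M/M/1 model, a simplifying assumption (random arrivals, random service times, one server) rather than a description of any real origin: the average time a request spends at the server is 1 / (service rate - arrival rate), so delay grows sharply as the server approaches full load. The Python sketch below uses hypothetical rates.

```python
def mm1_time_in_system_s(arrival_rate: float, service_rate: float) -> float:
    """Average time a request spends queued plus being served in an M/M/1 queue."""
    if arrival_rate >= service_rate:
        return float("inf")  # arrivals outpace service, so the queue grows without bound
    return 1.0 / (service_rate - arrival_rate)


ORIGIN_CAPACITY = 1_000  # hypothetical requests per second the origin can serve
total_demand = 900  # hypothetical requests per second from users

# Without a CDN, every request reaches the origin
print(f"{mm1_time_in_system_s(total_demand, ORIGIN_CAPACITY) * 1000:.2f} ms")
# If a CDN absorbs 80% of requests (the static ones), only 20% reach the origin
print(f"{mm1_time_in_system_s(total_demand * 0.2, ORIGIN_CAPACITY) * 1000:.2f} ms")
```

In this model, offloading the static requests cuts the average time at the origin from 10 ms to about 1.2 ms, even though the origin itself did not get any faster.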

Note that newer is almost always faster. Staying on old software for compatibility’s sake will hurt your performance in the long run, as you’ll miss out on performance enhancements that everyone else is taking advantage of.

There’s far too much detail to fit into this article; I’ll cover the essential technologies to keep up with in future articles. Or, if you’re using a CDN, you should expect it to do this on your behalf.

Bandwidth vs. Latency

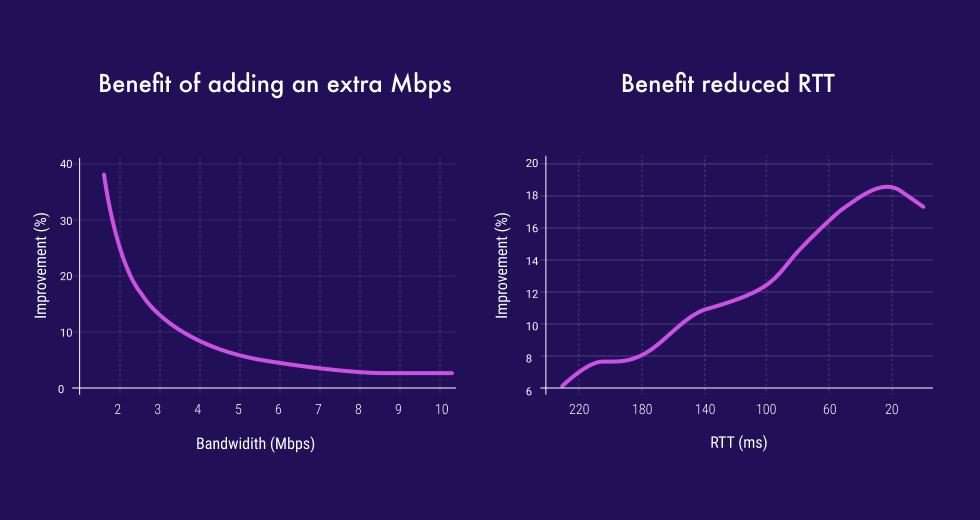

In considering all the options, it’s certainly reasonable to ask where you should start and which you should optimize first. Although I’m keen to point out that it’s not a straightforward comparison, it’s also indisputable that you’ll always get the best results from improving latency.

In 2010, web performance engineer Mike Belshe published research showing that improving bandwidth has diminishing returns the more you optimize, while reducing latency always helps.
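A toy model shows why. Assume (hypothetically) that a page needs 20 sequential round trips (DNS lookup, TCP and TLS handshakes, and dependent requests) plus 2 MB of data. Load time is then roughly round trips × RTT plus bytes ÷ bandwidth. The bandwidth term shrinks toward zero as bandwidth grows, so each upgrade buys less, while the latency term falls in direct proportion to RTT. This Python sketch illustrates the shape of the result; it is not a reproduction of Belshe’s methodology or data.

```python
def page_load_s(bandwidth_mbps: float, rtt_ms: float,
                page_bytes: int = 2_000_000, round_trips: int = 20) -> float:
    """Toy model: sequential round trips plus time to transfer the page's bytes."""
    latency_part = round_trips * rtt_ms / 1000
    bandwidth_part = page_bytes * 8 / (bandwidth_mbps * 1e6)
    return latency_part + bandwidth_part


print("Varying bandwidth at 100 ms RTT:")
for mbps in (1, 2, 5, 10, 20):
    print(f"  {mbps:>2} Mbps -> {page_load_s(mbps, 100):.1f} s")

print("Varying RTT at 10 Mbps:")
for rtt in (200, 100, 50, 20):
    print(f"  {rtt:>3} ms -> {page_load_s(10, rtt):.1f} s")
```

In this model, going from 1 to 2 Mbps saves 8 seconds, but going from 10 to 20 Mbps saves only 0.8 seconds, and no amount of bandwidth gets the page below the 2 seconds spent on round trips at 100 ms RTT. Halving the RTT, by contrast, always removes half of the latency term.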
